llama.cpp: Быстрый старт

-

Загрузите последнюю версию llama.cpp под свою платформу: https://github.com/ggerganov/llama.cpp/releases Например, для Windows с поддержкой GPU необходимо скачать: llama-b4458-bin-win-cuda-cu12.4-x64.zip + cudart-llama-bin-win-cu12.4-x64.zip.

-

Извлеките файлы из скаченных архивов. Если вы скачали файлы cudart, поместите файлы dll в папку с распакованными файлами llama.cpp.

-

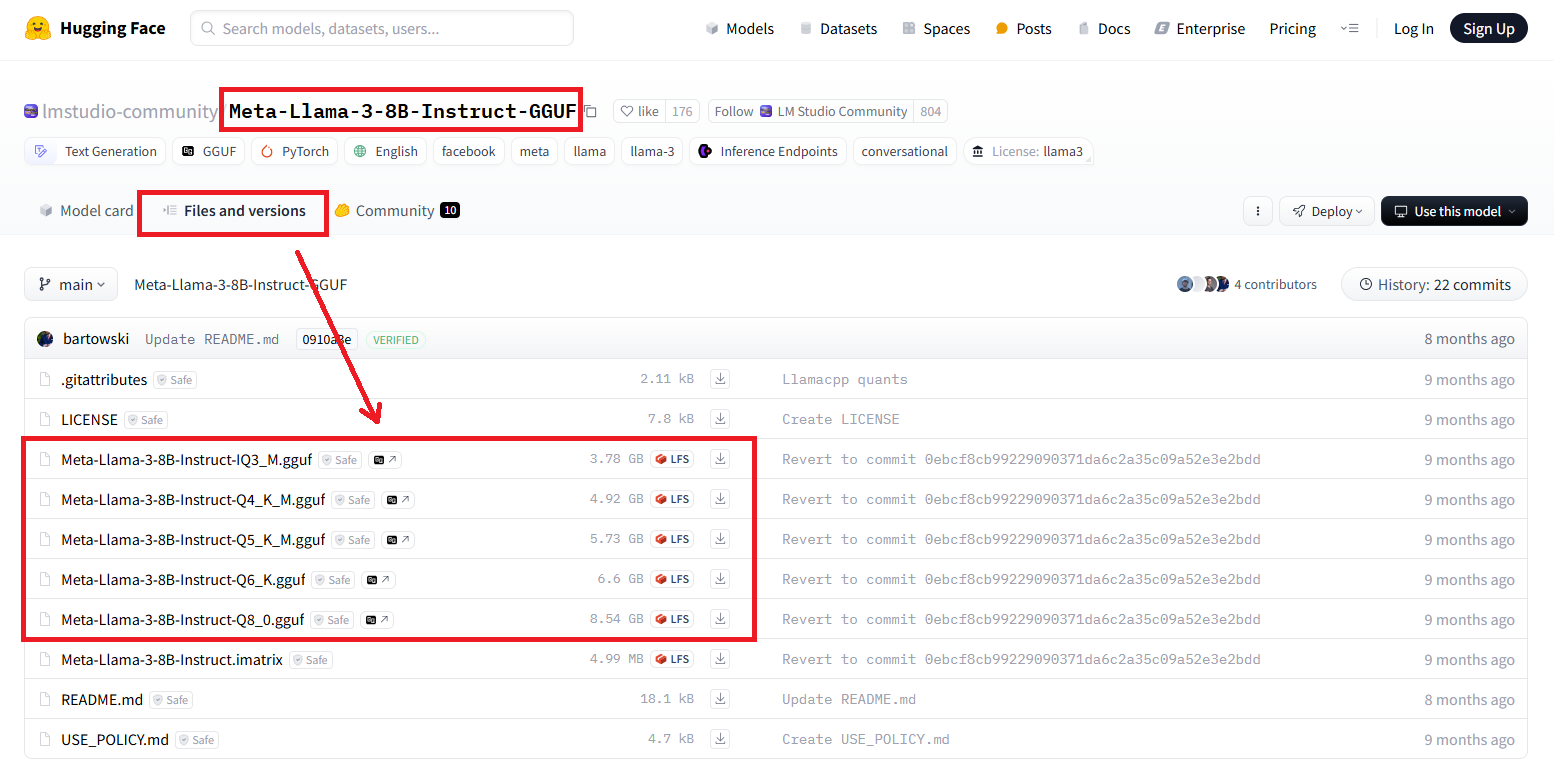

Найдите и скачайте файлы моделей (LLM) в формате guff: https://huggingface.co/models?search=gguf.

Например, https://huggingface.co/lmstudio-community/Meta-Llama-3-8B-Instruct-GGUF/tree/main:

-

Запустите командную строку в папке llama.cpp и выполните следующую команду:

llama-cli -m model.gguf -p "Ты умный помогатель. Помогай чем только сможешь." -cnv -

Наслаждайтесь!